Sometimes, even as a tech reporter, you can be caught out by how quickly technology improves. Case in point: it was only today that I learned that my iPhone has been offering a feature I’ve long desired — the ability to identify plants and flowers from just a photo.

It’s true that various third-party apps have offered this function for years, but last time I tried them I was disappointed by their speed and accuracy. And, yes, there’s Google Lens and Snapchat Scan, but it’s always less convenient to open up an app I wouldn’t otherwise use.

But, since the introduction of iOS 15 last September, Apple has offered its own version of this visual search feature. It’s called Visual Look Up, and it’s pretty damn good.

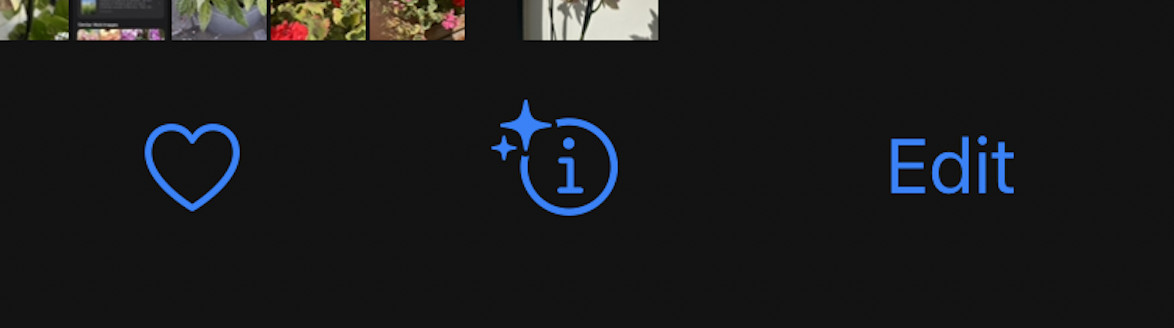

It works very simply. Just open up a photo or screenshot in the Photos app and look for the blue “i” icon underneath. If it has a little sparkly ring around it, then iOS has found something in the photo it can identify using machine learning. Tap the icon, then tap “Look Up” and it’ll try to dredge up some useful information.

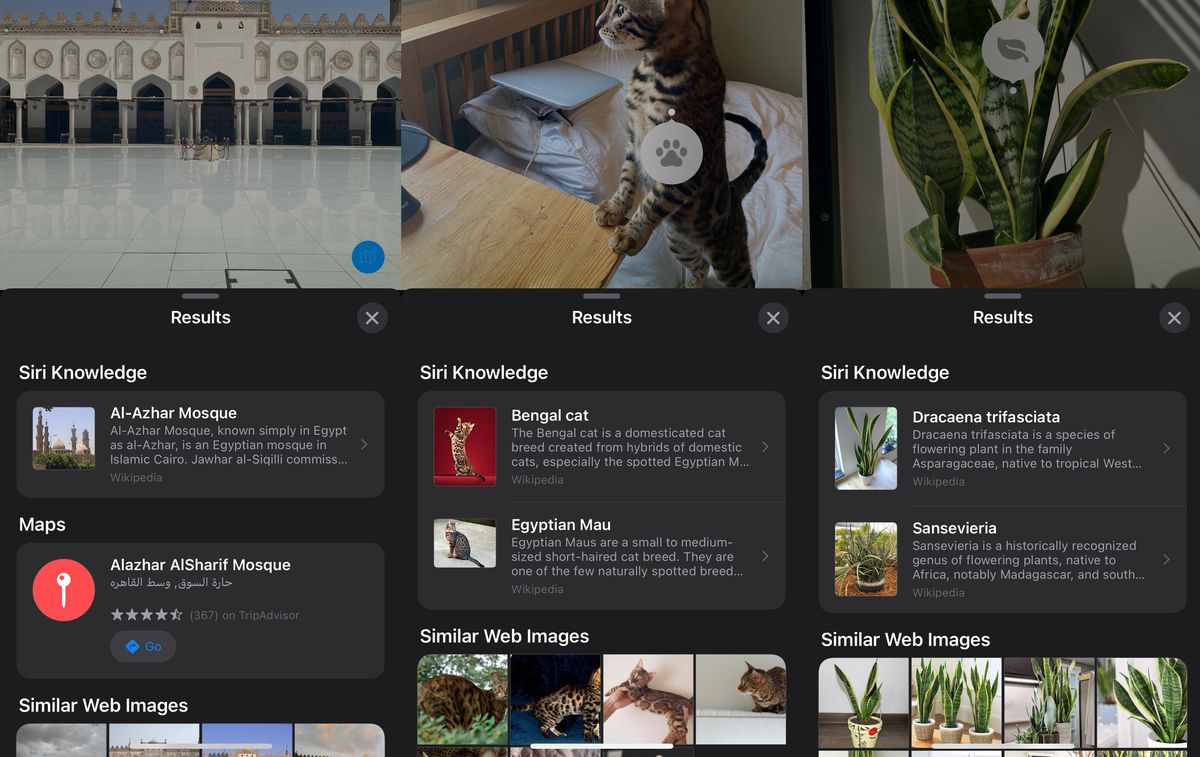

It doesn’t just work for plants and flowers, either, but for landmarks, art, pets, and “other objects.” It’s not perfect, of course, but it’s surprised me more times than it’s let me down.

Although Apple announced this feature last year at WWDC, it hasn’t exactly been trumpeting its availability. (I spotted it via a link in one of my favorite tech newsletters, The Overspill.) Even the official support page for Visual Look Up gives mixed messages, telling you in one place it’s “U.S. only” then listing other compatible regions on a different page.

Visual Look Up is still limited in its availability, but access has expanded since launch. It’s now available in English in the US, Australia, Canada, UK, Singapore, and Indonesia; in French in France; in German in Germany; in Italian in Italy; and in Spanish in Spain, Mexico, and the US.

It’s a great feature, but it’s also got me wondering what else visual search could do. Imagine snapping a picture of your new houseplant, for example, only for Siri to ask “want me to set up reminders for a watering schedule?” — or, if you take a picture of a landmark on holiday, for Siri to search the web to find opening hours and where to buy tickets.

I learned long ago that it’s foolish to pin your hopes on Siri doing anything too advanced. But these are the sorts of features we might eventually get with future AR or VR headsets. Let’s hope that if Apple does introduce this sort of functionality, it makes a bigger splash.