Apple’s software is very good, generally speaking. Even as the company has spread its focus among more platforms than ever — macOS and iOS and iPadOS and tvOS and watchOS and whatever software Apple’s building for its maybe-possibly-coming-someday car and its almost-certainly-coming-soon AR / VR headset — those platforms have continued to be excellent. It’s been a while since we got an Apple Maps-style fiasco; the biggest mistakes Apple makes now are much more on the level of putting the Safari URL bar on the wrong part of the screen.

What all that success and maturity breeds, though, is a sense that Apple’s software is… finished — or at least very close. Over the last couple of years, the company’s software announcements at WWDC have been almost exclusively iterative and additive, with few big swings. Last year’s big iOS announcements, for instance, were some quality-of-life improvements to FaceTime and some new kinds of ID that work in Apple Wallet. Otherwise, Apple mostly just rolled out new settings menus: new controls for notifications, Focus mode settings, privacy tools — that sort of thing.

This is not a bad thing! Neither is the fact that Apple is the best fast-follower in the software business, remarkably quick to adapt and polish everybody else’s new ideas about software. Apple’s devices are as feature-filled, long-lasting, stable, and usable as anything you’ll find anywhere. Too many companies try to reinvent everything all the time for no reason and end up creating problems where they didn’t exist. Apple is nothing if not a ruthlessly efficient machine, and that machine is hard at work honing every pixel its devices create.

But we’re at an inflection point in technology that will demand more from Apple. It’s now fairly clear that AR and VR are Apple’s next big thing, the next supposedly earth-shakingly huge industry after the smartphone. Apple’s not likely to show off a headset at WWDC, but as augmented and virtual reality come to more of our lives, everything about how we experience and interact with technology is going to have to change.

Apple has been showing off AR for years, of course. But all it’s shown are demos, things you can see or do on the other side of the camera. We’ve seen very little from the company about how it thinks AR devices are going to work and how we’re going to use them. The company that loves raving about its input devices is going to need a few new ones and a new software paradigm to match. That’s what we’re going to see this year at WWDC.

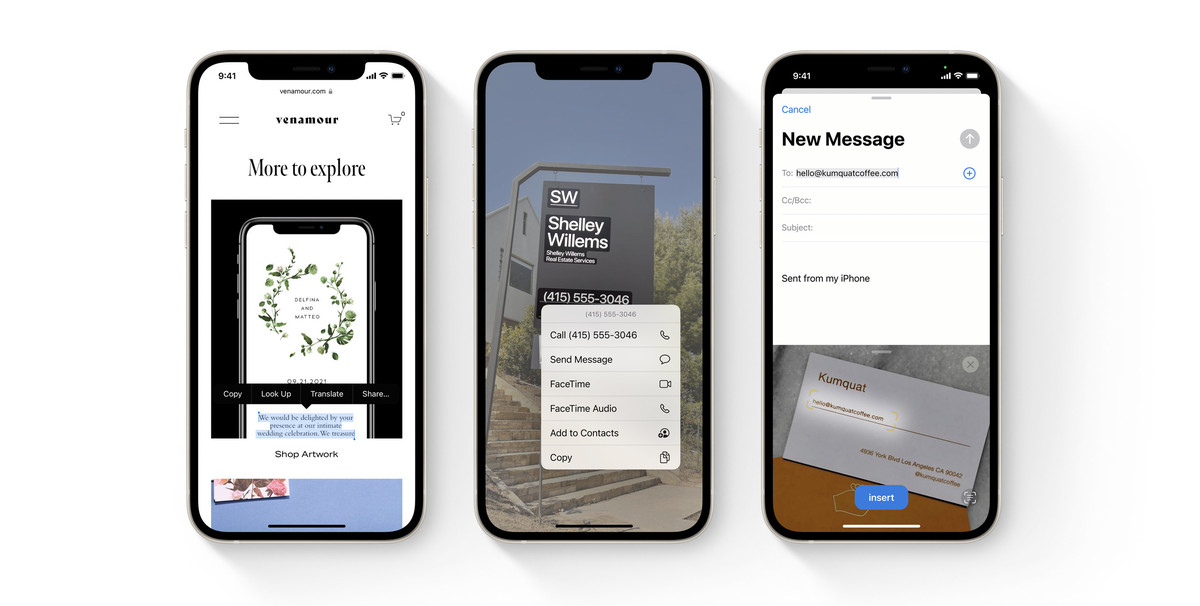

Remember last year, when Apple showed that you could take a picture of a piece of paper with your iPhone and it would automatically scan and recognize any text on the page? Live Text is an AR feature through and through: it’s a way of using your phone’s camera and AI to understand and catalog information in the real world. The whole tech industry thinks that’s the future — that’s what Google’s doing with Maps and Lens and what Snapchat is doing with its lenses and filters. Apple needs a lot more where Live Text came from.

From a simple UI perspective, one thing AR will require is a much more efficient system for getting information and accomplishing tasks. Nobody’s going to wear AR glasses that send them Apple Music ads and news notifications every six minutes, right? And full-screen apps that demand your undivided attention are increasingly going to be a thing of the past.

We may get some hints about what that will look like: it sounds like “use your phone without getting lost in your phone” is going to be a theme at this year’s WWDC. According to Bloomberg’s Mark Gurman, we could see an iOS lock screen that shows useful information without requiring you to unlock your phone. A more glanceable iPhone seems like an excellent idea and a good way to keep people from opening their phone to check the weather only to find themselves deep down a TikTok hole three and a half hours later. Same goes for the rumored “interactive widgets,” which would let you do basic tasks without having to open an app. And, if Focus mode gets some rumored enhancements — and especially if Apple can make Focus mode easier to set up and use — it could be a really useful tool on your phone and a totally essential one on your AR glasses.

AR will demand software that does more but also gets out of the way more

I’d also expect Apple to continue to bring its devices much closer together in terms of both what they do and how they do it in an effort to make its whole ecosystem more usable. With a nearly full line of Macs and iPads running on Apple’s M chip — and maybe a full line after WWDC if the long-awaited Mac Pro finally appears — there’s no reason for the devices not to share more DNA. Universal Control, which was probably the most exciting iOS 15 announcement even if it didn’t ship until February, is a good example of what it looks like for Apple to treat its many screens as part of an ecosystem. If iOS 16 brings true freeform multitasking to the iPad (and boy, I hope it does), an iPad in a keyboard dock is basically a Mac. Apple used to avoid that closeness; now, it appears to be embracing it. And, if it sees all these devices as ultimately companions and accessories to a pair of AR glasses, it’ll need them all to do the job well.

The last time Apple — hell, the last time anyone — had a truly new idea about how we use gadgets was in 2007 when the iPhone launched. Since then, the industry has been on a yes-and path, improving and tweaking without ever really breaking from the basics of multitouch. But AR is going to break all of that. It can’t work otherwise. That’s why companies are working on neural interfaces, trying to perfect gesture control, and trying to figure out how to display everything from translated text to maps and games on a tiny screen in front of your face. Meta is already shipping and selling its best ideas; Google’s are coming out in the form of Lens features and sizzle videos. Now, Apple needs to start showing the world how it thinks an AR future works. Headset or no headset, that will be the story of WWDC 2022.