On Tuesday morning, Apple announced a new wave of accessibility features for its various computing platforms, which are set to roll out later this year as software updates for the iPhone, iPad, Mac, and Apple Watch.

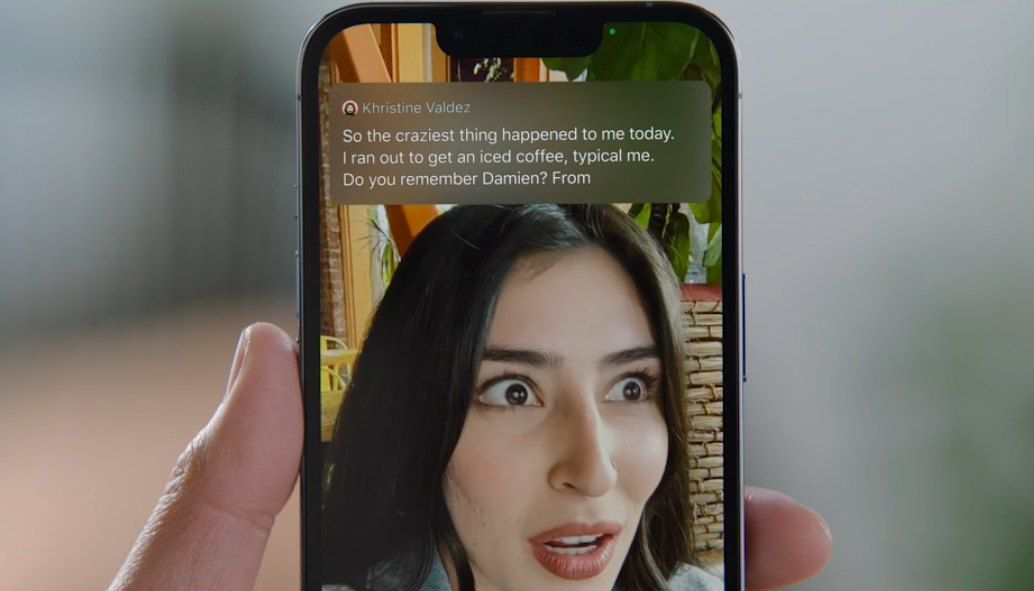

Apple said it will beta test Live Captions that can transcribe any audio content, including FaceTime calls, video conferencing apps (with auto attribution to identify the speaker), streaming video, and in-person conversations, in English across the iPhone, iPad, and Mac. Google’s push for Live Caption features began around the release of Android 10, and they are now available in English on the Pixel 2 and later devices, as well as “select” other Android phones, and in additional languages for the Pixel 6 and Pixel 6 Pro. So it’s good to see the Apple ecosystem catching up and bringing the feature to even more people.

As with Android’s implementation, Apple says its captions will be generated on the user’s device, keeping information private. The beta will launch later this year in the US and Canada for the iPhone 11 and later, iPads with the A12 Bionic chip and later, and Macs with Apple Silicon chips.

The Apple Watch will expand the AssistiveTouch gesture recognition controls it added last year with Quick Actions that recognize a double pinch to end a call, dismiss notifications, take a picture, pause / play media, or start a workout. We explain what the gesture controls already do and how they work in our guide to using your Apple Watch hands-free.

The Apple Watch is also getting easier to use for people with physical and motor disabilities, thanks to a new mirroring feature that adds remote control from a paired iPhone. Apple Watch Mirroring includes tech pulled from AirPlay, making it easier to access unique features of the Watch without relying on the ability to tap its tiny screen or on what voice controls can enable.

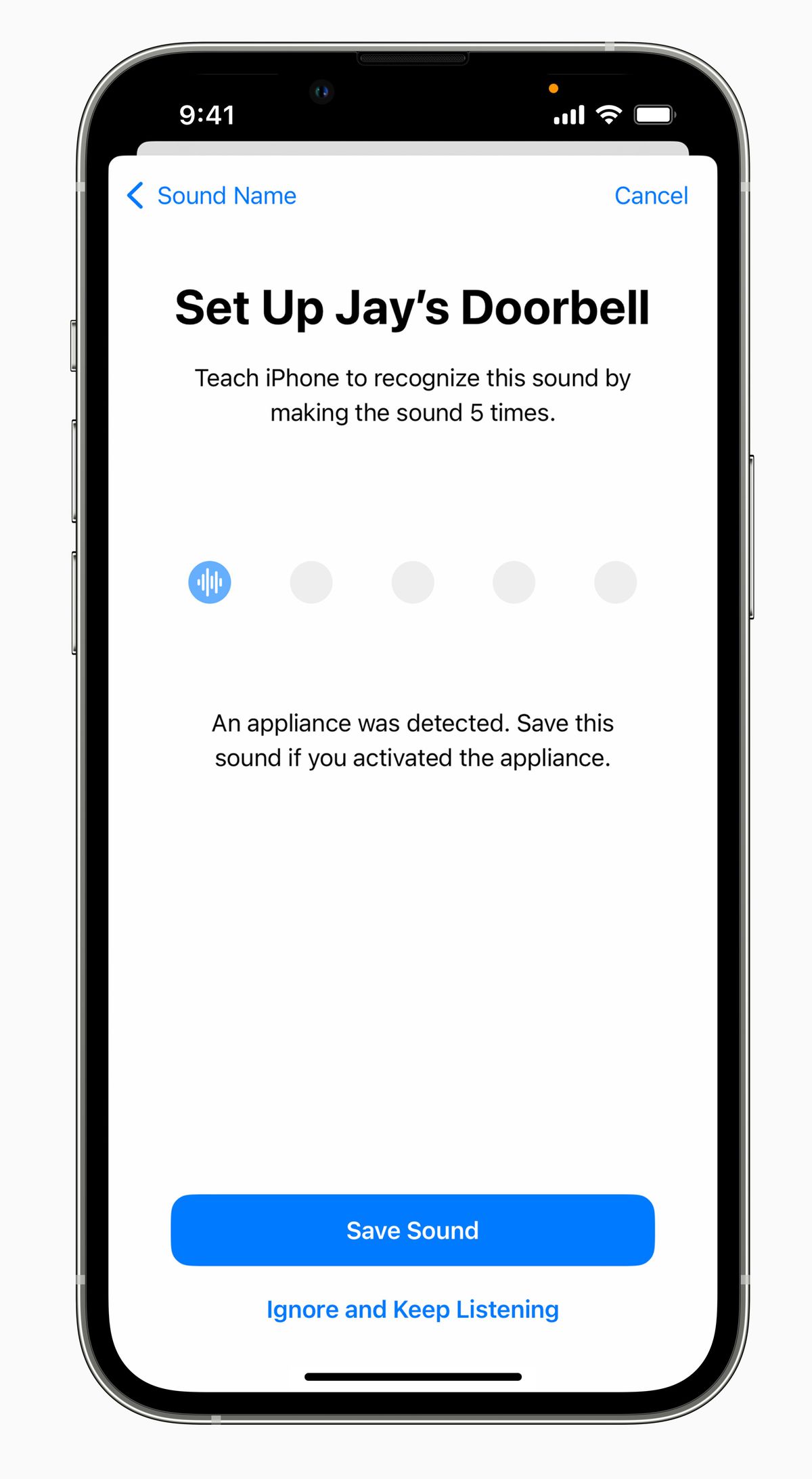

Apple rolled out Sound Recognition with iOS 14 to pick up on specific sounds like a smoke alarm or water running and alert users who may be deaf or hard of hearing. Soon, Sound Recognition will support tuning for customized recognition of sounds. As shown in this screenshot, it can listen for repeated alerts and learn to key on alerts that are specific to the user’s environment, like an unusual doorbell alert or appliance ding.

Enhancements to Apple’s VoiceOver screen reader, Speak Selection, and Speak Screen features will add support for more than 20 new “locales and languages,” covering Arabic (World), Basque, Bengali (India), Bhojpuri (India), Bulgarian, Catalan, Croatian, Farsi, French (Belgium), Galician, Kannada, Malay, Mandarin (Liaoning, Shaanxi, Sichuan), Marathi, Shanghainese (China), Spanish (Chile), Slovenian, Tamil, Telugu, Ukrainian, Valencian, and Vietnamese. On the Mac, VoiceOver’s new Text Checker will scan for formatting issues like extra spaces or misplaced capital letters, while in Apple Maps, VoiceOver users can expect new sound and haptic feedback that will indicate where to start for walking directions.

At Apple, we design for accessibility from the ground up and we’re constantly innovating on behalf of our users. The cutting-edge features we are sharing today will offer new ways for people with disabilities to navigate, connect, and so much more. https://t.co/Zrhcng95QA

— Tim Cook (@tim_cook) May 17, 2022

Apple says that on-device processing will use the lidar sensor and cameras on an iPhone or iPad for Door Detection. The new feature coming to iOS will help users find a door at a new location, tell them where it is, and describe whether it works with a knob or a handle as well as whether it’s open or closed.

Door Detection will be part of the Detection Mode Apple is adding to Magnifier in iOS, which also collects existing features that let the camera zoom in on nearby objects and describe them or recognize people who are nearby and alert the user with sounds, speech, or haptic feedback. Because they rely on the lidar sensor, People Detection and Door Detection will require an iPhone Pro or iPad Pro model that includes it.

Another new feature on the way is Buddy Controller, which combines two game controllers into one so that a friend can help someone play a game, similar to the Copilot feature on Xbox.

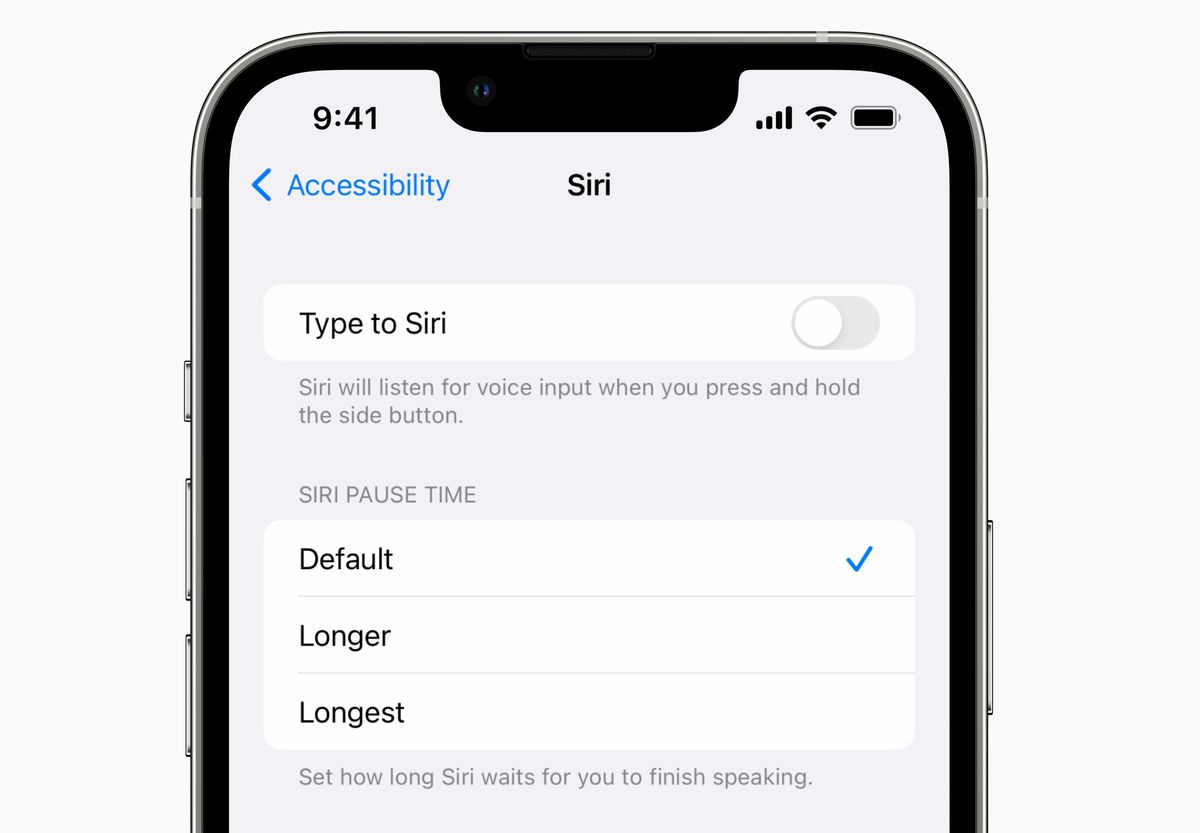

Finally, other tweaks include Voice Control Spelling Mode with letter-by-letter input, controls to adjust how long Siri waits to respond to requests, and additional visual options for Apple Books that can bold text, change themes, or adjust the line, character, and word spacing to make text more readable.

The announcements are part of Apple’s recognition this week of Global Accessibility Awareness Day on May 19th. The company notes that Apple Store locations will offer live sessions to help people find out more about existing features, and a new Accessibility Assistant shortcut will come to the Mac and Apple Watch this week to recommend specific features based on a user’s preferences.