Machine Learning could bring touch-sensitive controls to the basic AirPods and more

Apple wants a future iPhone or AirPods to watch the user and learn a user's movements with Machine Learning so that swiping on any device or surface can cause a reaction like turning volume up or down.

It sounds like a parlor trick. In a newly-granted patent, Apple wants devices to be able to pretend to have touch-sensitive controls. It stresses that this is for any device, but the repeated example is of earbuds where this could mean having a volume control where there is no volume control.

The patent is called "Machine-Learning Based Gesture Recognition," but gestures are only part of it. Apple's idea is that a device such as a wearable earbud could have other sensors, typically an optical or proximity one, but perhaps a temperature sensor or a motion one.

"However, an earbud and/or earphones may not include a touch sensor for detecting touch inputs and/or touch gestures," says Apple, "in view of size/space constraints, power constraints, and/or manufacturing costs."

If you haven't got a touch sensor today, that's limiting. But that's not stopping Apple.

"Nonetheless, it may be desirable to allow devices that do not include touch sensors, such as earbuds, to detect touch input and/or touch gestures from users," it continues. "[This proposal] enables a device that does not include a touch sensor to detect touch input and/or touch gestures from users by utilizing inputs received via one or more non-touch sensors included in the device."

So maybe your earbud has a microphone and it could pick up the sound of you tapping your finger on the device. Or it has an optical sensor and your finger blocks the light as you go to stroke the device.

Apple is being very thorough about just what sensors could conceivably be used — and used in conjunction with one another. "The sensors... may include one or more sensors for detecting device motion, user biometric information (e.g., heartrate), sound, light, wind, and/or generally any environmental input," it says.

"For example," it continues, "the sensors... may include one or more of an accelerometer for detecting device acceleration, one or more microphones for detecting sound and/or an optical sensor for detecting light."

The point is that on its own, any one sensor could be wrong. You might be scratching your ear right next to the microphone, for instance. Or the light is blocked because you're leaning against a wall.

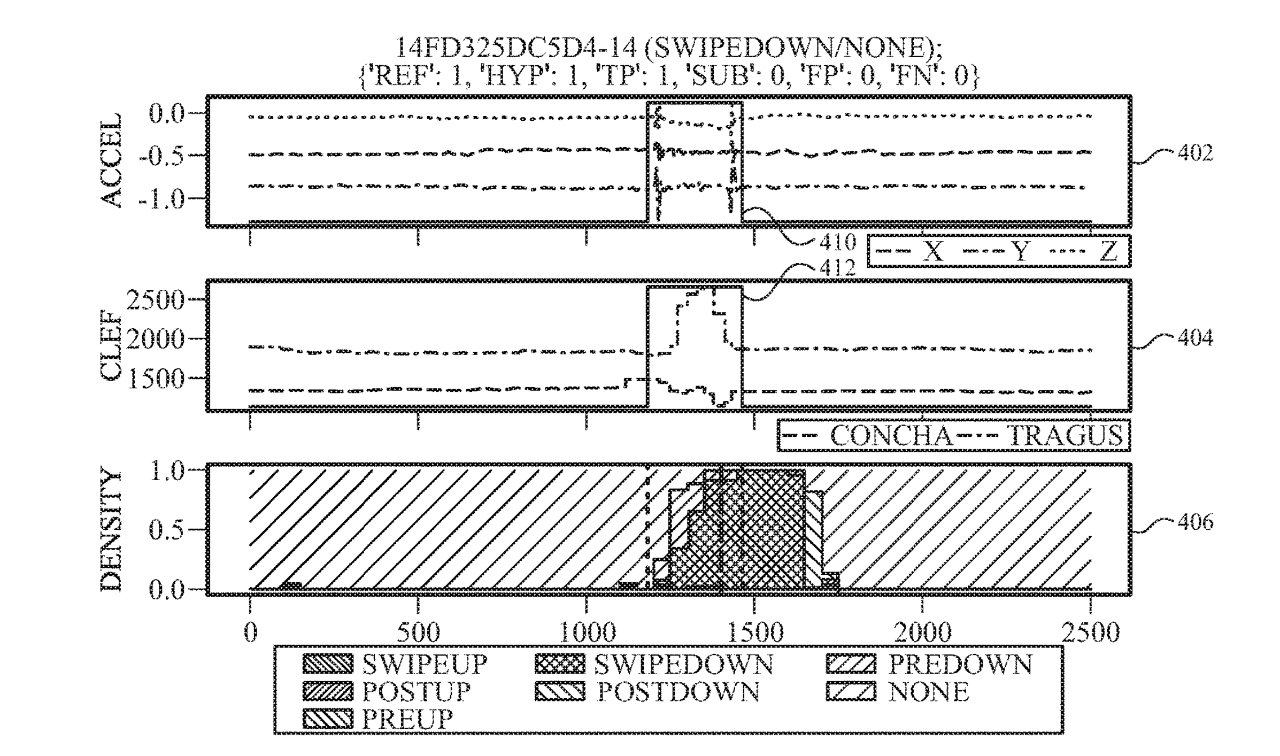

Apple's idea, and the reason this involves Machine Learning, is that it is the combination of sensors that can work together. "[The] inputs detected by the optical sensor, accelerometer, and microphone may individually and/or collectively be indicative of a touch input," says the patent.

"After training, the machine learning model generates a set of output predictions corresponding to predicted gesture(s)," continues Apple. So ML can learn that a scratching sound on the microphone isn't enough by itself, but when light into an optical sensor is blocked, something's happening.
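To make that concrete, here is a minimal Python sketch of how weak signals from several sensors could be combined into a single touch probability. It is not Apple's model. The feature names, the hand-picked weights, and the use of a logistic function are all assumptions for illustration, and a trained model would learn its parameters from data instead.

```python
import math

# Hypothetical weights chosen by hand for illustration only;
# a real model would learn these from labeled sensor recordings.
WEIGHTS = {"optical_blocked": 2.0, "accel_spike": 2.0, "mic_tap": 2.0}
BIAS = -3.0


def touch_probability(features):
    """Combine per-sensor evidence (each 0.0 to 1.0) into one probability."""
    z = BIAS + sum(WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) function


# A scratching sound alone is weak evidence of a touch.
mic_only = touch_probability({"mic_tap": 1.0})

# The same sound plus a blocked optical sensor is much stronger evidence.
mic_and_optical = touch_probability({"mic_tap": 1.0, "optical_blocked": 1.0})
```

With these made-up weights, a microphone tap on its own scores below 50 percent, while the same tap combined with a blocked optical sensor scores above it.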

As ever with patents, the descriptions are more about how something is detected than what will then be done with the information. In this case, though, Apple does say that after such "predictions are generated, a policy may be applied to the predictions to determine whether to indicate an action for the wireless audio output device 104 to perform."

So if ML thinks a change in, say, three sensors, is significant, it can pass that on to software. That software can then, for one example, raise or lower volume on a device.
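The patent does not spell out what such a policy looks like. One plausible reading, sketched below in Python, is a debounce: only act when the same gesture is predicted with high confidence several times in a row. The gesture labels, the threshold, the frame count, and the action names are all hypothetical.

```python
# Hypothetical mapping from predicted gestures to device actions.
ACTIONS = {"swipe_up": "volume_up", "swipe_down": "volume_down"}


def apply_policy(predictions, threshold=0.8, min_consecutive=3):
    """Return an action name, or None if the predictions are not convincing.

    predictions is a sequence of (gesture_label, confidence) pairs,
    one per model output frame, in time order.
    """
    run_label, run_length = None, 0
    for label, confidence in predictions:
        if confidence >= threshold and label in ACTIONS:
            # Extend the current run, or start a new one if the gesture changed.
            run_length = run_length + 1 if label == run_label else 1
            run_label = label
            if run_length >= min_consecutive:
                return ACTIONS[label]
        else:
            # A weak or unknown prediction breaks the run.
            run_label, run_length = None, 0
    return None


steady = apply_policy([("swipe_up", 0.9)] * 3)
flaky = apply_policy([("swipe_up", 0.9), ("swipe_up", 0.5),
                      ("swipe_up", 0.9), ("swipe_up", 0.9)])
```

Here three confident "swipe_up" frames in a row produce a volume change, while a run interrupted by a low-confidence frame produces nothing.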

It sounds like Apple is cramming many sensors into devices and has had to decide which one to leave out for lack of space. But by extension, if ML can learn from all of the sensors in a device, it can surely learn from every device a user has.

Consequently, if an AirPod detects a certain sound but the Apple Watch does not, then that sound is happening next to the earbud. It is therefore that much more likely that the user wants to do something with the AirPod.

This invention is credited to eight inventors. They include Timothy S. Paek, whose previous work for Apple includes having Siri take notes when you're on the phone.